Agent OS: LLM OS Micro Architecture for Composable, Reusable AI Agents

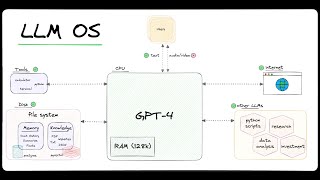

Agent OS is an architecture for building AI agents that focuses on IMMEDIATE results today and over the long term. The LLM ecosystem is constantly evolving, so to keep up you need an architecture with interchangeable parts that can be swapped in and out as needed. This is where Agent OS comes in. A good architecture can future-proof your AI agents and make them more adaptable.

Agent OS is a micro architecture based on Andrej Karpathy's LLM OS. It comprises three primary components: the Language Processing Unit (LPU), Input/Output (IO), and Random Access Memory (RAM). Each serves a distinct purpose in the construction of AI agents, enabling you, the developer, to build systems that are not only efficient but also adaptable to the rapidly changing landscape of AI/LLM technology.

The LPU, at the core of the architecture, integrates model providers, individual models, prompts, and prompt chains into a cohesive unit. By keeping all LLM- and prompt-related functionality in one component, the LPU, we can focus prompt engineering and prompt testing on this single unit of the agent. This integration makes it possible to build AI agents that solve specific problems with high precision. Thanks to the layered architecture, each piece can be swapped out: when GPT-4.5 or GPT-5 rolls out, you can upgrade your AI agent without rebuilding the entire system from scratch.

The RAM component lets your AI agent operate on state, so it can adapt to changing inputs and produce novel results. The IO layer, on the other hand, provides the tools (function calling) your AI agent needs to interact with the real world. This includes making web requests, interacting with databases, and monitoring the agent's performance through spyware. By monitoring your AI agent's state, inputs, and outputs, you can identify issues and improve the system.
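To make the three-component split concrete, here is a minimal Python sketch, assuming a simple text-in/text-out model interface. Every name in it (`ModelProvider`, `FakeModel`, `LPU`, `RAM`, `IO`, `Agent`) is hypothetical and not taken from the video; `FakeModel` stands in for a real provider client so the example runs offline, and the IO "spyware" is reduced to an in-memory event log.

```python
from dataclasses import dataclass, field
from typing import Callable, Protocol


class ModelProvider(Protocol):
    """Hypothetical interface: any LLM backend that turns a prompt into text."""

    def complete(self, prompt: str) -> str: ...


class FakeModel:
    """Offline stand-in provider; swap in a real client with the same method."""

    def __init__(self, name: str) -> None:
        self.name = name

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


@dataclass
class LPU:
    """Language Processing Unit: owns the model and the prompt chain."""

    model: ModelProvider
    prompt_chain: list[str]  # templates run in order; "{input}" is the previous output

    def run(self, user_input: str) -> str:
        result = user_input
        for template in self.prompt_chain:
            result = self.model.complete(template.format(input=result))
        return result


@dataclass
class RAM:
    """State the agent reads and updates between runs."""

    state: dict[str, object] = field(default_factory=dict)


@dataclass
class IO:
    """Tools (function calling) plus a log that records inputs, outputs, tool results."""

    tools: dict[str, Callable[[str], str]] = field(default_factory=dict)
    log: list[tuple[str, str]] = field(default_factory=list)

    def record(self, event: str, payload: str) -> None:
        self.log.append((event, payload))

    def call(self, name: str, arg: str) -> str:
        result = self.tools[name](arg)
        self.record(f"tool:{name}", result)
        return result


@dataclass
class Agent:
    """Hypothetical agent wiring the three components together."""

    lpu: LPU
    ram: RAM
    io: IO

    def run(self, user_input: str) -> str:
        self.io.record("input", user_input)
        output = self.lpu.run(user_input)
        self.ram.state["last_output"] = output
        self.ram.state["runs"] = int(self.ram.state.get("runs", 0)) + 1
        self.io.record("output", output)
        return output


agent = Agent(
    lpu=LPU(model=FakeModel("model-a"), prompt_chain=["Summarize: {input}"]),
    ram=RAM(),
    io=IO(tools={"word_count": lambda text: str(len(text.split()))}),
)
print(agent.run("Agent OS has three parts"))  # [model-a] Summarize: Agent OS has three parts

# Upgrading the model only touches the LPU; RAM and IO stay as they are.
agent.lpu.model = FakeModel("model-b")
```

Because `Agent` depends only on the `complete` method, a model upgrade is a one-line change inside the LPU, which is the swap-ability the description is after.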
In this video we dig into the idea of composable agents, where the output of one agent can be the input of another. This powerful concept can be used to build complex agents that solve a wide range of problems. It's the evolution of the core idea agentic engineering is built on: the prompt is the new fundamental unit of programming and knowledge work. First you have LLMs, then prompts, then prompt chains, then AI agents, and then agentic workflows. This is the future of programming and knowledge work.

🧠 Andrej Karpathy's LLM OS • [1hr Talk] Intro to Large Language Mo...
🔗 7 Prompt Chains for Powerful AI Agents • 7 Prompt Chains for Decision Making, ...
💻 Everything is a Function • How to Engineer Multi-Agent Tools: Yo...
🔍 Multi Agent Spyware • AutoGen SPYWARE: Coding Systems for S...

📖 Chapters
00:00 Best way to build AI Agents?
00:39 Agent OS
01:58 Big Ideas (Summary)
02:48 Breakdown Agent OS: LPU, RAM, I/O
04:03 Language Processing Unit (LPU)
05:42 Is this over engineering?
07:30 Memory, Context, State (RAM)
08:20 Tools, Function Calling, Spyware (I/O)
10:22 How do you know your Architecture is good?
13:27 Agent Composability
16:40 What's missing from Agent OS?
18:53 The Prompt is the...

#aiagent #llm #architecture
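The composable-agent idea, where one agent's output becomes the next agent's input, can be sketched in Python, assuming every agent exposes a single hypothetical `run(text) -> str` method. `Agent`, `FunctionAgent`, and `Pipeline` are illustrative names, not an API from the video, and the lambdas stand in for LLM-backed agents.

```python
from typing import Callable, Protocol


class Agent(Protocol):
    """Hypothetical minimal contract: text in, text out."""

    def run(self, text: str) -> str: ...


class FunctionAgent:
    """Wraps a plain function as an agent; a real agent would call an LLM here."""

    def __init__(self, name: str, fn: Callable[[str], str]) -> None:
        self.name = name
        self.fn = fn

    def run(self, text: str) -> str:
        return self.fn(text)


class Pipeline:
    """Feeds each agent's output into the next agent's input.

    A Pipeline satisfies the same contract as a single agent, so pipelines nest.
    """

    def __init__(self, *agents: Agent) -> None:
        self.agents = agents

    def run(self, text: str) -> str:
        for agent in self.agents:
            text = agent.run(text)
        return text


research = FunctionAgent("research", lambda topic: f"notes on {topic}")
writer = FunctionAgent("writer", lambda notes: f"draft from {notes}")
editor = FunctionAgent("editor", lambda draft: draft.upper())

# A two-agent pipeline used as one step inside a larger pipeline.
blog = Pipeline(Pipeline(research, writer), editor)
print(blog.run("agent os"))  # DRAFT FROM NOTES ON AGENT OS
```

Keeping the contract to one string in and one string out is what makes the pieces interchangeable; richer agents would likely pass structured data instead, at the cost of a stricter interface.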