Ji Lin's PhD Defense, Efficient Deep Learning Computing: From TinyML to Large Language Model. @MIT

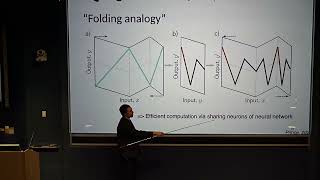

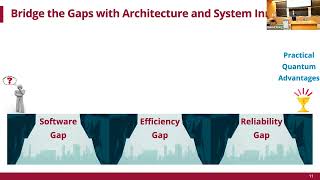

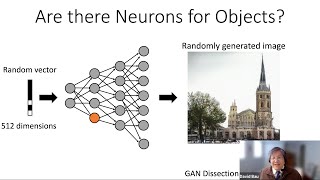

Ji Lin completed his PhD at MIT EECS in December 2023, advised by Prof. Song Han. His research focuses on efficient deep learning computing for ML and on accelerating large language models (LLMs). Ji has pioneered research in TinyML, with work including MCUNet, MCUNetV2, MCUNetV3 (on-device training), AMC, TSM (highlighted and integrated by NVIDIA), and AnyCost GAN. More recently, he proposed SmoothQuant (W8A8) and AWQ (W4A16) for LLM quantization, both of which have been widely integrated into industry solutions (NVIDIA FasterTransformer/TensorRT-LLM, Intel Neural Compressor/Q8Chat, FastChat, vLLM, HuggingFace Transformers/TGI, LMDeploy, etc.). His work has been covered by MIT Tech Review, MIT News (four times, twice on the MIT homepage), WIRED, Engadget, VentureBeat, and others. His research has received over 8,500 citations on Google Scholar and over 8,000 stars on GitHub. Ji was an NVIDIA Graduate Fellowship Finalist in 2020 and a Qualcomm Innovation Fellowship recipient in 2022. He was the TA of the efficientml.ai course (MIT 6.5940, Fall 2023).