XGBoost Made Easy | Extreme Gradient Boosting | AWS SageMaker

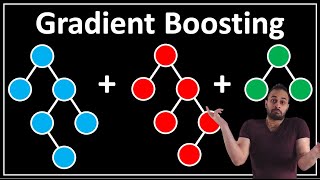

XGBoost has recently become the go-to algorithm for many developers and has won several Kaggle competitions. Because it is an ensemble method, it is robust and can work well across many data types and complex distributions. XGBoost has many tunable hyperparameters that can improve model fit, and it works for both regression and classification tasks. Ensemble techniques such as bagging and boosting can produce an extremely powerful model by combining a group of relatively weak or average ones. For example, bagging several decision trees yields a powerful random forest. It is like combining votes from a pool of experts: each brings their own experience and background to the problem, which results in a better outcome. Boosting mainly reduces bias by having each new tree correct the errors of the previous ones, while XGBoost's built-in regularization helps limit overfitting and increases model robustness. I hope you will enjoy this video and find it useful and informative! Thanks. #xgboost #aws #sagemaker
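To make the "many weak learners combined" idea concrete, here is a minimal sketch of plain gradient boosting with one-split decision stumps on a toy 1-D regression problem. It is not XGBoost itself, which adds regularization, second-order gradient information, and deeper trees. The function names, the toy data, and the settings (`n_rounds`, `learning_rate`) are assumptions chosen for illustration, and only the Python standard library is used.

```python
# Minimal gradient boosting with decision stumps (squared-error loss).
# Illustrative sketch only: NOT XGBoost's actual algorithm.

def fit_stump(xs, residuals):
    """Find the single threshold split that minimizes squared error."""
    best = None
    # Exclude the largest value so the right-hand side is never empty.
    for threshold in sorted(set(xs))[:-1]:
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        error = (sum((r - left_mean) ** 2 for r in left)
                 + sum((r - right_mean) ** 2 for r in right))
        if best is None or error < best[0]:
            best = (error, threshold, left_mean, right_mean)
    return best[1:]  # (threshold, left_mean, right_mean)


def predict_stump(stump, x):
    threshold, left_mean, right_mean = stump
    return left_mean if x <= threshold else right_mean


def fit_boosted(xs, ys, n_rounds=50, learning_rate=0.3):
    """Each round fits a stump to the current residuals (the errors so far)."""
    base = sum(ys) / len(ys)  # start from the mean prediction
    preds = [base] * len(xs)
    stumps = []
    for _ in range(n_rounds):
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        preds = [p + learning_rate * predict_stump(stump, x)
                 for p, x in zip(preds, xs)]
    return base, stumps, learning_rate


def predict_boosted(model, x):
    base, stumps, learning_rate = model
    return base + learning_rate * sum(predict_stump(s, x) for s in stumps)


def mse(model, xs, ys):
    return sum((predict_boosted(model, x) - y) ** 2
               for x, y in zip(xs, ys)) / len(xs)


# Toy data (an assumption for illustration): y = x^2 on [0, 3.9].
xs = [i / 10 for i in range(40)]
ys = [x * x for x in xs]

single = fit_boosted(xs, ys, n_rounds=1, learning_rate=1.0)  # one weak learner
boosted = fit_boosted(xs, ys)                                # 50 weak learners

print("single stump training MSE:", mse(single, xs, ys))
print("boosted stumps training MSE:", mse(boosted, xs, ys))
```

Each stump on its own is a weak model, but summing many of them, each fitted to the errors left by the previous ones, drives the training error well below what a single stump achieves. In practice one would reach for the `xgboost` library (for example its `XGBRegressor` or `XGBClassifier` estimators) rather than hand-rolled code like this.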