Transformers explained | The architecture behind LLMs

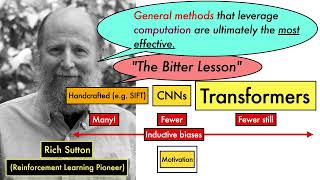

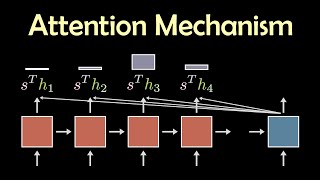

All you need to know about the transformer architecture: how to structure the inputs, attention (queries, keys, values), positional embeddings, and residual connections. Bonus: an overview of the differences between Recurrent Neural Networks (RNNs) and transformers.

Correction at 9:19: the order of multiplication should be the opposite, x1 (vector) * Wq (matrix) = q1 (vector). Otherwise we do not get the 1x3 dimensionality at the end. Sorry for messing up the animation!

➡️ AI Coffee Break Merch! 🛍️ https://aicoffeebreak.creator-spring....

Outline:

- 00:00 Transformers explained
- 00:47 Text inputs
- 02:29 Image inputs
- 03:57 Next word prediction / Classification
- 06:08 The transformer layer: 1. MLP sublayer
- 06:47 2. Attention explained
- 07:57 Attention vs. self-attention
- 08:35 Queries, Keys, Values
- 09:19 Order of multiplication should be the opposite: x1 (vector) * Wq (matrix) = q1 (vector).
- 11:26 Multi-head attention
- 13:04 Attention scales quadratically
- 13:53 Positional embeddings
- 15:11 Residual connections and Normalization Layers
- 17:09 Masked Language Modelling
- 17:59 Differences from RNNs

Thanks to our Patrons who support us in Tier 2, 3, 4: 🙏 Dres. Trost GbR, Siltax, Vignesh Valliappan, @Mutual_Information, Kshitij

- Our old Transformer explained 📺 video: The Transformer neural network archit...
- 📺 Tokenization explained: What is tokenization and how does it ...
- 📺 Word embeddings: How modern search engines work – Vect...
- 📽️ Replacing Self-Attention: Replacing Self-attention
- 📽️ Position embeddings: Positional encodings in Transformers ...
- @SerranoAcademy Transformer series: The Attention Mechanism in Large Lang...

📄 Vaswani, Ashish, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N. Gomez, Łukasz Kaiser, and Illia Polosukhin. "Attention is all you need." Advances in Neural Information Processing Systems 30 (2017).

▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀

🔥 Optionally, buy us a coffee to help with our Coffee Bean production!

- ☕ Patreon: / aicoffeebreak
- Ko-fi: https://ko-fi.com/aicoffeebreak

▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀

🔗 Links:

- AICoffeeBreakQuiz: / aicoffeebreak
- Twitter: / aicoffeebreak
- Reddit: / aicoffeebreak
- YouTube: / aicoffeebreak

#AICoffeeBreak #MsCoffeeBean #MachineLearning #AI #research

Music 🎵: Sunset n Beachz - Ofshane

Video editing: Nils Trost
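The correction at 9:19 in the description comes down to matrix shapes. The pure-Python sketch below assumes a row-vector convention, a hypothetical 4-dimensional token embedding x1 and a hypothetical 4x3 query matrix Wq; the numbers are made up for illustration and are not taken from the video. Multiplying x1 (1x4) by Wq (4x3) yields a 1x3 query q1, while the reversed order Wq * x1 fails because the inner dimensions do not match. With column vectors, the same projection is usually written as Wq^T x1.

```python
def matmul(a, b):
    """Multiply matrix a (n x m) by matrix b (m x p); raise ValueError on a shape mismatch."""
    n, m = len(a), len(a[0])
    if len(b) != m:
        raise ValueError(f"shape mismatch: ({n}x{m}) @ ({len(b)}x{len(b[0])})")
    p = len(b[0])
    return [[sum(a[i][k] * b[k][j] for k in range(m)) for j in range(p)] for i in range(n)]


def shape(mat):
    """Return (rows, columns) of a list-of-lists matrix."""
    return (len(mat), len(mat[0]))


# Hypothetical values: a 1x4 row-vector embedding x1 and a 4x3 query projection W_q.
x1 = [[1.0, 0.0, 2.0, -1.0]]
W_q = [
    [0.1, 0.2, 0.0],
    [0.0, 1.0, 0.5],
    [1.0, 0.0, 0.3],
    [0.5, 0.5, 0.5],
]

# Correct order: (1x4) @ (4x3) -> (1x3) query vector.
q1 = matmul(x1, W_q)
print(shape(q1))  # prints (1, 3)

# Reversed order: (4x3) @ (1x4) is undefined because the inner dimensions 3 and 1 differ.
try:
    matmul(W_q, x1)
except ValueError as err:
    print(err)  # prints shape mismatch: (4x3) @ (1x4)
```

The same shape rule applies to the key and value projections: each row-vector embedding is multiplied on the left of its projection matrix.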